TL;DR

Computers use words too! This post explains what a computer word is. It is aligned with the Black Box Software Testing Foundations course (BBST) designed by Rebecca Fiedler, Cem Kaner, and James Bach.

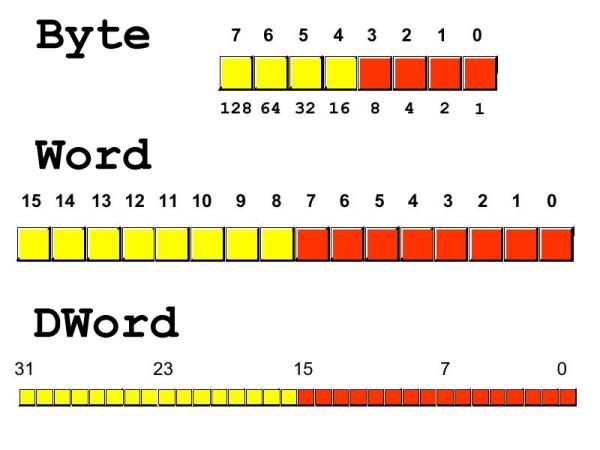

A computer (more precisely, its processor) reads memory several bits at a time. A word is the amount of memory typically read in one fetch operation.

The Apple II had a word size of 1 byte (8 bits). The first IBM Personal Computer had a word size of 16 bits. My MacBook Pro has a word size of 64 bits.
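If you are curious about your own machine, here is a minimal Python sketch. It assumes that the size of a native pointer is a reasonable proxy for the word size, which holds on mainstream desktop platforms but is not guaranteed. It also reports the Python build, so a 32-bit Python on a 64-bit processor would print 32. The name `pointer_bits` is only for illustration.

```python
import struct

# "P" is the struct format code for a native pointer (void *).
# Assumption: pointer width matches the processor word size.
pointer_bits = struct.calcsize("P") * 8

print(pointer_bits)  # typically 64 on a 64-bit Python build
```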

When software testers test an application, they rely on the default heuristic that the computer always correctly reads a full word from memory.

Integer

The number of bits used to represent an integer depends on the platform. macOS High Sierra uses 64 bits for integers, which gives the following range of signed integers:

Min = -9,223,372,036,854,775,808

Max = 9,223,372,036,854,775,807
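Where do these two numbers come from? Here is a small sketch, assuming the usual two's-complement representation: one of the 64 bits carries the sign, so the range is -2^63 to 2^63 - 1. The helper `signed_range` is a hypothetical name used only for this example.

```python
def signed_range(bits: int):
    """Return (min, max) of a two's-complement signed integer with the given width."""
    return -(2 ** (bits - 1)), 2 ** (bits - 1) - 1

lo, hi = signed_range(64)
print(f"{lo:,}")  # -9,223,372,036,854,775,808
print(f"{hi:,}")  # 9,223,372,036,854,775,807
```

The same helper gives -128 to 127 for the 8-bit case and -32,768 to 32,767 for 16 bits.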

Floating Point Numbers

Floating point numbers can be stored in single or double precision.

Here is how the 32 bits are used in single precision:

- The leftmost bit is the sign bit.

- The next 8 bits are the exponent.

- The next 23 bits are the mantissa.

That gives us the following decimal range for positive normalized values: 1.175494351 × 10^-38 to 3.402823466 × 10^+38
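The single precision layout and its range can be checked with a short Python sketch. It assumes the IEEE 754 single precision format, where the exponent field is stored with a bias of 127 and the exponent values 0 and 255 are reserved for special cases. The names `float32_fields`, `min_normal`, and `max_finite` are hypothetical and used only for illustration.

```python
import struct

def float32_fields(x: float):
    """Split x, stored as IEEE 754 single precision, into (sign, exponent, mantissa)."""
    # Pack as a big-endian 32-bit float, then reread the same bytes as an unsigned int.
    (bits,) = struct.unpack(">I", struct.pack(">f", x))
    sign = bits >> 31                # leftmost bit
    exponent = (bits >> 23) & 0xFF   # next 8 bits, stored with a bias of 127
    mantissa = bits & 0x7FFFFF       # last 23 bits
    return sign, exponent, mantissa

# Smallest positive normalized value: exponent field 1, mantissa all zeros.
min_normal = 2.0 ** -126
# Largest finite value: exponent field 254, mantissa all ones.
max_finite = (2.0 - 2.0 ** -23) * 2.0 ** 127

print(float32_fields(1.0))  # (0, 127, 0)
print(min_normal)
print(max_finite)
```

The two printed limits agree with the decimal range above to the digits shown there.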

Here is how the 64 bits are used in double precision:

- The leftmost bit is the sign bit.

- The next 11 bits are the exponent.

- The next 52 bits are the mantissa.

What is the decimal range for double precision? The first correct answer in the comments (with an uploaded image of the calculation as proof of work) will get the book “Lessons Learned in Software Testing”.