Writing time: 3.5 hours

TL;DR

This is a report about what I learned on the second day of the European Testing Conference.

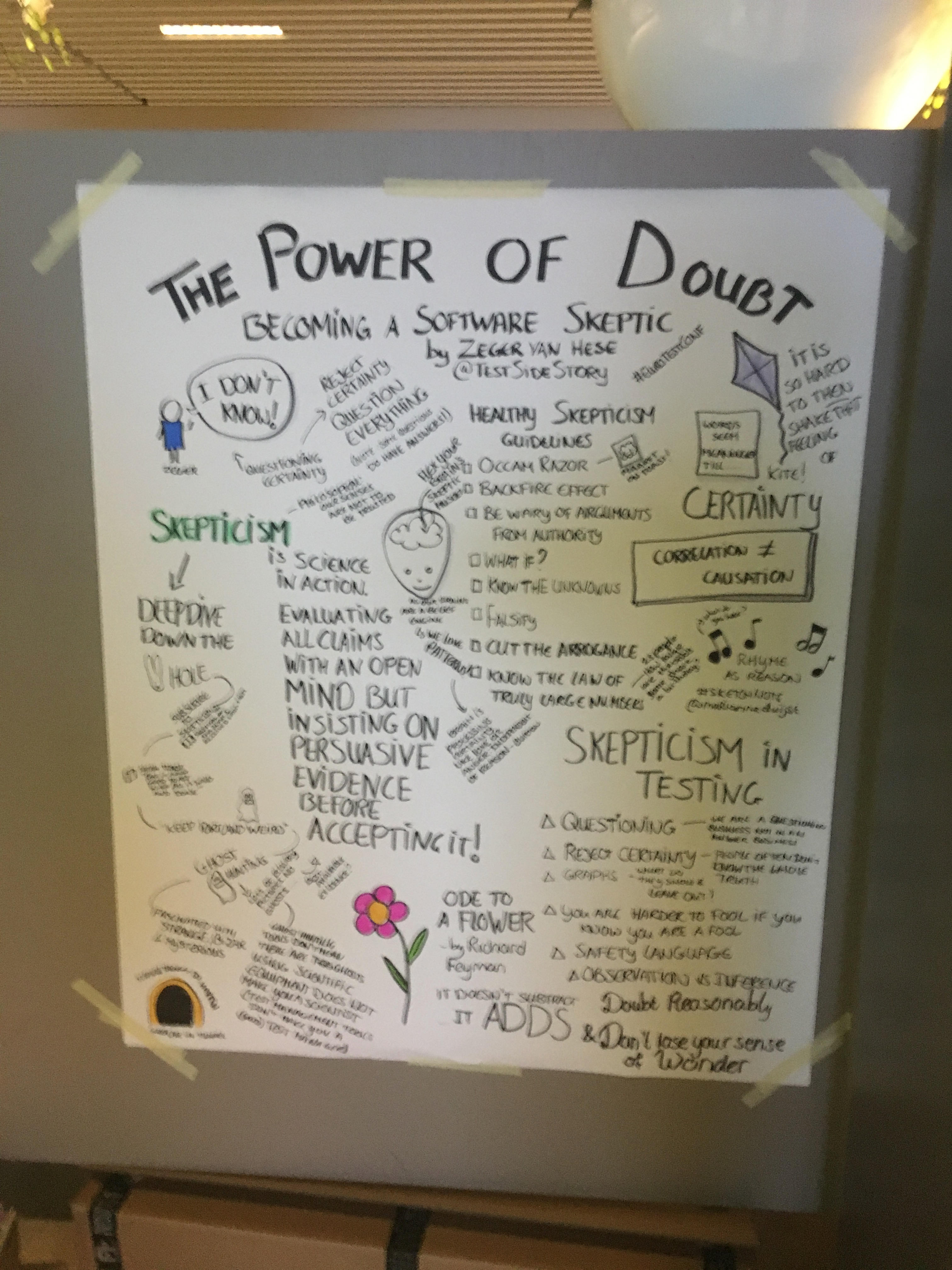

The second day's morning keynote started right on schedule (another big plus for the conference). Zeger Van Hese talked about "The power of doubt: Becoming a software sceptic".

To become a skeptic, you first need to accept that it is safe to admit: I do not know! That admission must be followed by a series of clever questions that help you learn. Some of those questions do have an answer.

[tweet id="965861580687765504" align="center"]

But questioning everything must not be scattershot; it must be based on the scientific method. That is why there are heuristics for healthy skepticism, a skeptic's toolkit:

# Occam's Razor. I wrote about Occam's Razor in one of my previous posts: How I discovered a new heuristic by reading a fiction book. Occam's Razor is a problem-solving principle attributed to William of Ockham (c. 1287–1347). It states that among competing hypotheses, the one with the fewest assumptions should be selected. For example, right now I can hear a noise on the right side of my office. Is it a ghost (assuming dead people decided to visit my office), or is it the heating pipes?

# Backfire effect. This hypothesis must be true because it is my idea.

# Argument from authority. This hypothesis is true because it comes from an authority.

# What if I am wrong? Start by questioning yourself.

# Know the unknowns. Say "I do not know" and start questioning!

# Falsify. Try to prove that the other hypothesis is wrong.

# Cut the arrogance. This one is self-explanatory.

# Law of truth through large numbers. We should be careful with fancy infographics, because they can show you what you want to see.

Takeaways

Not knowing is good.

Testing is a questioning business. Ask context-free questions, then question the questions.

You will never know the whole truth.

You are harder to fool if you know that you are a fool.

Use safety language: might, could, so far.

Observation is gathering data; inference is drawing conclusions from that data.

And we got a skeptic manifesto:

[tweet id="965874066858143744" align="center"]

# Workshop #1 by Vernon Richards: Scripted testing vs Exploratory testing.

[tweet id="965896525674176512" align="center"]

We had 1.5 hours for this workshop. In line with the code of conduct, Vernon used a simple heuristic to form random groups. We stood in a half circle around him, ordered by birth month. Then he assigned each of us a group number. There were seven groups, a number he calculated from the number of participants.

Vernon's intention was to let us compare scripted and exploratory testing on a real application (created by Troy Hunt for his Pluralsight security courses).

We first tested the registration feature using six test cases.

Then we tested without test cases, now that we knew the feature.

The third exercise used testing charters for directed exploratory testing: try to find security issues, input type issues, or UTF-8 input issues.

At the end of each session, we gave Vernon our pros and cons for that session.

Takeaways

# There is no single better way; sometimes you need to do scripted testing too.

# Exploratory testing can look like random clicking. You must take test session notes; otherwise you will forget what you tested.

# Exploratory testing does have structure, and that structure comes from charters.

It is important to state that all testing is exploratory; otherwise it is not testing.

If you do not know how to start learning exploratory testing, I strongly advise you to catch this workshop!

# Workshop #2 by Alex Schladebeck: Exploratory testing in action

Alex ran a 45-minute exploratory testing session.

The application under test was the Lufthansa multi-flight booking feature.

Charter: a function tour, looking for risks that a customer will miss a connecting flight because of the application.

She promised results in mind map format and a debrief session.

She drove the first session herself. We very soon hit unexpected behaviour (this is why the Lufthansa app was chosen) and got a flood of information that we needed to note somehow. Notes were made on a whiteboard, but not in mind map form. This also happens to me when I do not use a mind map program for my notes. Drawing mind maps on paper is hard for me.

In the second session, Alex had a driver, and the point was to experience the driver's role. The driver noted that it was hard to hold on to his own testing ideas while waiting for new instructions.

Takeaway

Taking notes during exploratory session is hard!

# Talk: Monitoring in production by Mirjana Kolarov

[tweet id="965927006830546944" align="center"]

Mirjana presented the tools she found useful on a project where her team set up monitoring of the production environment:

Zabbix, Kibana, New Relic and the brain!

These tools help you aggregate application logs. But before setting them up, you must agree with the team on what will actually be monitored.

Takeaways

Most errors fail fast.

When the dashboard is empty, it can mean that the log aggregator is down.

Have a backup option, in this case Kibana and New Relic.
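
The empty-dashboard takeaway can be turned into an explicit check. Below is a minimal Python sketch, assuming the application writes a periodic heartbeat line into the same log pipeline (a hypothetical convention, not something from the talk). If even the heartbeat has not arrived, an empty error panel says nothing about the system, so the check reports "no data" instead of "all good". The function name and the five-minute threshold are illustrative assumptions.

```python
from datetime import datetime, timedelta


def dashboard_status(error_events, last_heartbeat, now,
                     max_silence=timedelta(minutes=5)):
    """Interpret an error panel, treating silence as a possible monitoring failure.

    error_events   -- error log entries currently shown on the panel (may be empty)
    last_heartbeat -- timestamp of the newest heartbeat line that reached the
                      aggregator (hypothetical heartbeat convention)
    now            -- current time, passed in so the check is easy to test
    """
    if now - last_heartbeat > max_silence:
        # Not even the heartbeat arrived: the empty panel is not evidence of health.
        return "NO DATA: check the log aggregator or use the backup tool"
    if not error_events:
        # Logs are flowing and nothing went wrong.
        return "OK: logs are flowing and no errors were reported"
    return f"ALERT: {len(error_events)} error(s) reported"


if __name__ == "__main__":
    now = datetime(2018, 2, 20, 12, 0)
    print(dashboard_status([], now - timedelta(minutes=30), now))
    print(dashboard_status([], now - timedelta(minutes=1), now))
```

The design choice is simply to make "no data" a separate state from "no errors", which is exactly the distinction the takeaway warns about.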

# Open sessions

The number of participants in the open sessions was huge!

[tweet id="965938994637950976" align="center"]

And here are the proposed sessions:

I attended Gojko's and Richard's session on how to use mind maps in daily work.

How to use mind maps for knowledge sharing?

For me, the problem with mind maps is connecting two different hierarchies. Just using connectors is not enough, because the connection itself can carry hierarchy data. I also learned that some people understand mind maps better, while others understand a spider-web structure better. Richard uses mind maps to map the application model. The leaves of those mind maps represent actual interactions with the application (click this, input this).
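
Richard's idea that the leaves of an application-model mind map are concrete interactions can be sketched as a tree walk. This is a minimal Python sketch with a made-up registration model (hypothetical, not Richard's actual map): every root-to-leaf path reads as one test step. It also shows the limitation I described: a plain tree like this has no way to link nodes across two different hierarchies.

```python
# Hypothetical mind map of a registration page: inner nodes are areas of the
# application model, leaves (empty dicts) are concrete interaction instructions.
APP_MODEL = {
    "Registration": {
        "Username field": {
            "type 'alice'": {},
            "leave empty": {},
        },
        "Submit button": {
            "click": {},
        },
    },
}


def leaf_paths(node: dict, path: tuple = ()) -> list:
    """Return every root-to-leaf path; each path reads as one test step."""
    paths = []
    for label, children in node.items():
        current = path + (label,)
        if children:
            # Inner node: keep descending into the model.
            paths.extend(leaf_paths(children, current))
        else:
            # Leaf: a concrete "click this, input this" instruction.
            paths.append(current)
    return paths


if __name__ == "__main__":
    for steps in leaf_paths(APP_MODEL):
        print(" > ".join(steps))
```

Running it prints one line per leaf, for example `Registration > Submit button > click`.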

The second session was a discussion on how to start load testing. I presented my way of working: record your scenario as HTTP requests, export it to some programming language, edit it so the data becomes random, and apply the load. The edit step is not automatic, and you can ask developers to help you with it (it is the programming part). Scott Barber has excellent resources about load testing.
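
The record, export, edit, load workflow can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a replacement for a real load testing tool: the recorded request, its URL and its field names are hypothetical, and `send` is any callable that performs one request and returns a status code, so a stub can stand in for a real HTTP client. `randomize` is the manual "edit" step, and `apply_load` runs several virtual users in parallel threads and times each request.

```python
import random
import string
import time
from concurrent.futures import ThreadPoolExecutor

# Hypothetical recorded scenario: one registration request captured during a
# manual session. Fields that must vary per virtual user are set to None.
RECORDED_REQUEST = {
    "method": "POST",
    "url": "https://example.test/register",  # placeholder URL, not a real system
    "body": {"username": None, "email": None, "password": "Secret123!"},
}


def random_text(length: int = 8) -> str:
    """Random lowercase string so each request sends unique data."""
    return "".join(random.choices(string.ascii_lowercase, k=length))


def randomize(recorded: dict) -> dict:
    """The 'edit' step: copy the recorded request and fill the variable fields."""
    name = random_text()
    body = dict(recorded["body"])  # copy, so the recording is never mutated
    body["username"] = name
    body["email"] = f"{name}@example.test"
    return {**recorded, "body": body}


def apply_load(send, recorded: dict, users: int, requests_per_user: int) -> list:
    """The 'apply the load' step: run virtual users in parallel.

    Returns a flat list of (status, seconds) tuples, one per request.
    """
    def virtual_user(_user_id):
        timings = []
        for _ in range(requests_per_user):
            request = randomize(recorded)
            start = time.perf_counter()
            status = send(request)
            timings.append((status, time.perf_counter() - start))
        return timings

    with ThreadPoolExecutor(max_workers=users) as pool:
        per_user = list(pool.map(virtual_user, range(users)))
    return [timing for user_timings in per_user for timing in user_timings]


if __name__ == "__main__":
    # Stub instead of a real HTTP client, so the sketch runs offline.
    def fake_send(request):
        return 200

    results = apply_load(fake_send, RECORDED_REQUEST, users=5, requests_per_user=4)
    print(len(results), "requests sent")
```

Keeping `send` pluggable is the design choice here: the same driver can first be checked against a stub and then pointed at a real client once the developers have helped with the edit step.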

The third one was "Taking notes during an exploratory session". Nobody else was interested in it, so by chance I got a one-on-one session with Maaret Pyhäjärvi. She showed me her way of working, and I got some new ideas for taking my own session notes.

# Keynote: Testing vs. crowdsourced data by Dr. Pamela L. Gay

Dr. Gay explained the challenges in her project of filtering data received from the crowd, when that data consists of satellite images of Earth (or Mars) that need to be categorized. A very interesting topic.

That's it. Customer satisfaction for the second day: 4.4/5.