TL;DR

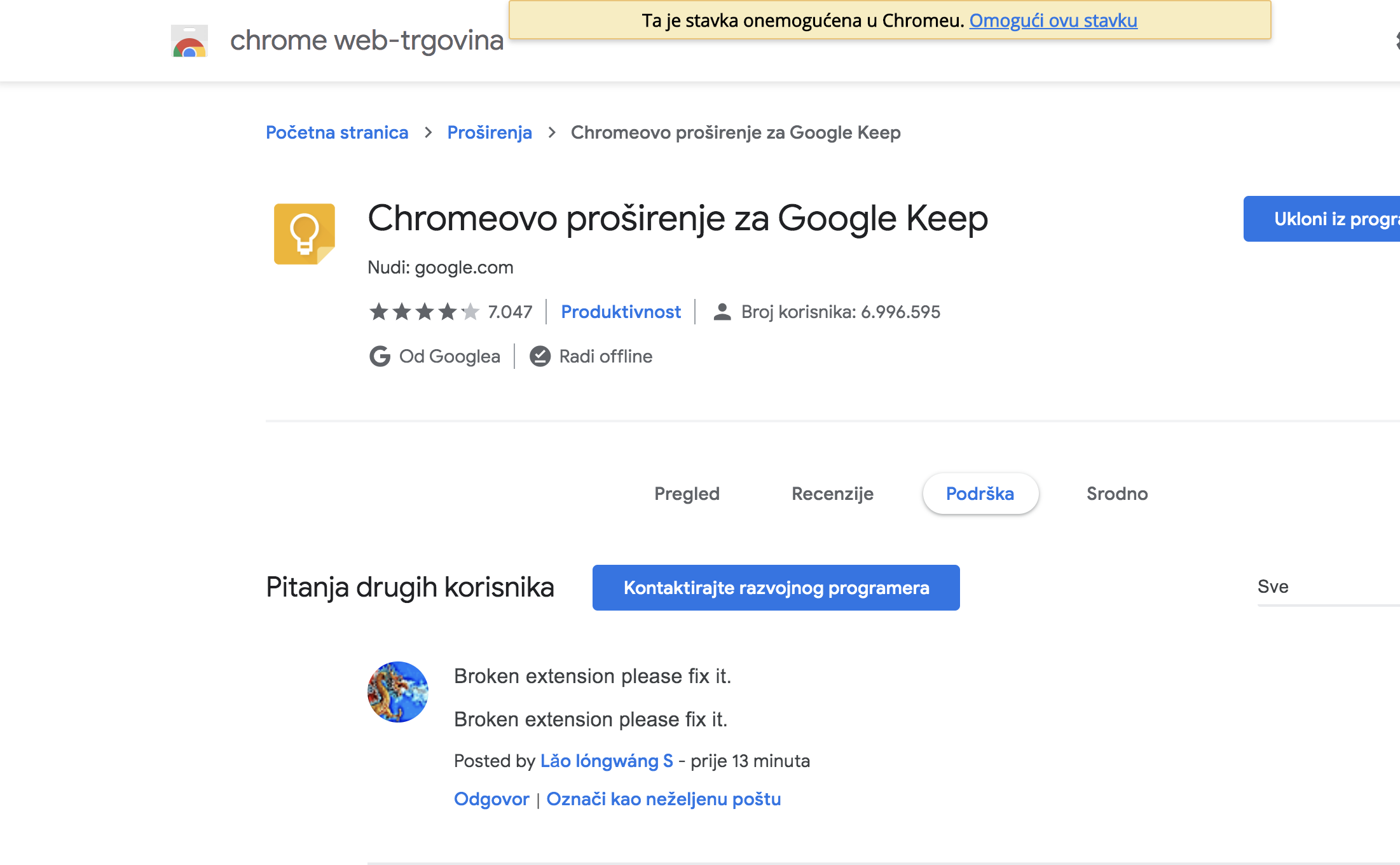

There is a particular type of software that we need to test: software that runs inside another popular piece of software. This post walks through an issue with Google Keep, a very popular Chrome extension.

We can extend the Chrome feature set with extensions. An extension is JavaScript code, and there are strict rules and APIs for developing such software. The main reason is user security: you do not want an extension that sends your credit card data to an attacker.

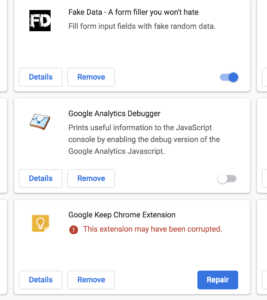

The Keep extension lets you easily manage your browser bookmarks in the cloud. I have been using it for some time. This weekend, when I wanted to add a bookmark, I noticed that the Keep icon had disappeared. I opened the Chrome extensions page and found a message saying that the extension may have been corrupted.

I clicked Repair, and nothing happened. Two things stand out about this issue:

- How the problem is reported. Removing the Keep icon does not clearly communicate that the extension is broken.

- Keep is created by Google, just like Chrome. An automated Chrome update broke the Keep extension, which could reveal something about how Google tests its software.

Keep is a popular extension with 6.8 million users, and it is free software. This issue gives us the following examples of violated consistency heuristics:

- History: Keep worked with earlier Chrome versions, and a new Chrome version broke it.

- Image: Google wants to project to the public that there are no devastating issues with its software in production.

Regression testing does not need large sets of automated checks. Smart use of the A FEW HICCUPPS heuristics [Bolton] could have prevented this Keep issue without any automated checks.