TL;DR

I attended the Rapid Software Testing workshop led by Michael Bolton at STP Las Vegas in 2010. It completely blew me away with its many small but powerful pieces of testing wisdom. The workshop was intense, fast-paced, and packed with information.

As course materials, we received only the presentation slides and a large collection of reference links. Now that the book Rapid Software Testing has finally been published, I have the opportunity to revisit all that wisdom — and everything I’ve learned and reflected on over the past fifteen years — quietly, in my study, and at my own pace.

Below are my takeaways from Chapter One.

I would really like to discuss these thoughts with you, so please feel free to reach out to me on LinkedIn. Zeljko Filipin proposed a book club, so I assume he’ll be joining the discussion as well.

Intro

Another important point is that I now also have access to James Bach’s thoughts and insights on Rapid Software Testing. I preordered the paper version of the book, which also gives me a small workout — carrying around a 500+ page, 2-kilogram book has its benefits.

I’m taking notes using my pet project, Boty, a Telegram bot manager. It allows me to capture and organize my notes directly through a Telegram bot and the Telegram mobile app, whenever an idea strikes.

Let’s start.

Acknowledgements

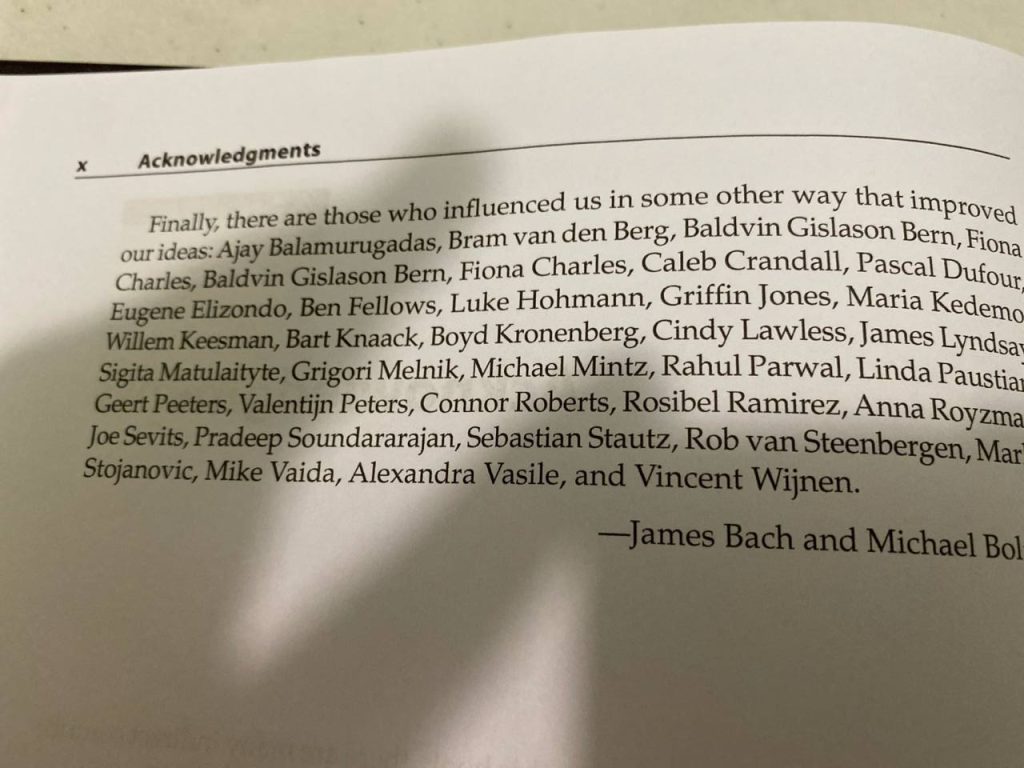

On page X, I found a bug! James and Michael love Fiona Charles so much (myself included) that she is acknowledged twice. Knowing them, that might even be intentional.

All files accompanying the book are available for download.

No one is an authority on software testing.

I agree with this Rapid Software Testing statement. For example, I completed all four AST BBST Testing courses. I have signed certificates, but I have never considered myself a testing authority within the Testival community (or even in my stand-up comedy circle).

I can talk at length about context-driven testing and how I’ve applied it across various projects. However, telling fellow testers that this is the only correct way simply doesn’t hold water. Context-driven testing is how I personally understand and practice testing, because it has consistently produced the best results for me.

I was once in the midst of the ISTQB community, and as far as I remember, their biggest achievement was the number of certificates sold. The atmosphere in that room felt off — there was a lot of bad energy flowing through it.

Many cultures of software testing.

According to the Oxford Dictionary of English, culture means “the distinctive ideas, customs, social behaviour, products, or way of life of a particular nation, society, people, or period.” This definition sets the tone of the book. Taking Testing Seriously is, at its core, a book about philosophy.

Testing cultures are divided into five testing schools:

- Analytics

- Factory

- Agile / DevOps

- Quality

- Context-Driven

For example, in the Agile / DevOps school, developers are responsible for testing. In the Quality school, everybody is responsible for quality — although the obvious question is: what exactly is quality?

The Factory school centers testing around algorithms and artifacts. At one point in my career, I even created a test case document that was over 1,000 pages long.

The Analytics school prevails mostly in academia and treats testing as part of computer science, something that can be described as a largely algorithmic process.

In the context-driven school, there are no best practices, only the skills to design and justify test practices that fit the project.

Every practice used to solve a problem is fallible. A practice may be useful in the context of your project, but it may also fail. You need to apply and practice it in order to develop the skill required to use it effectively.

This is a key difference from situations where some “authority” claims that their practice is the best one. When that practice fails in your project, the failure is blamed on you — supposedly because you lack the skill to apply it correctly. The practice itself is never questioned. It becomes a kind of “holy practice.”

This quote clearly separates the context-driven school of testing from the other schools:

Software testing is a social, psychological, and heuristic process.

Other testing schools often do not take into account the social and psychological aspects of testing. Yet if you’ve done any practical testing in your career, you have almost certainly influenced developers socially and psychologically — often without intending to.

We must be very careful about how we communicate bad news about discovered bugs. One basic principle is to never connect a bug directly to a person. Saying “You made this bug” is a very poor way to communicate a problem that the team needs to solve.

I like a story shared by Irja Straus. At a testing conference, she met a developer who was astonished by how much testers in the software testing community care about their colleagues’ emotions and psychological well-being. He asked her, “Why are you so nice to each other at these events?”

The paradigm

Rapid Software Testing is about paradigms. A paradigm is a typical example or pattern of something — a model. When you test software, you create a mental model of the product you are testing.

In that sense, we are in the business of creating a marketplace for testing ideas. The more paradigms we have, the more perspectives we can apply.

The real question is: how many puzzles can we solve with our paradigms?

In Rapid Software Testing, the focus is on people, skills, heuristics, and ethics.

Again — people. Not artifacts. Not lines of code. People. Our teams consist of people, and we create products for people.

Skills matter. We use heuristics to solve problems, and while we use them, we develop our skills as testers.

And we must not forget about ethics in software testing. Can software harm people because of a bug — or even because it does exactly what it was designed to do?

Testing with a fixed algorithm vs. open investigation

Rapid Software Testing clearly distinguishes between two types of testing. In automated testing, you rely on fixed algorithms. In Rapid Software Testing, testing is an open investigation.

You test, discover something new, and if that discovery is important in the context of the project, you continue investigating in that direction. If time runs out, you share what you’ve learned and explain where further investigation is needed — along with the risks of stopping at that point.

A bunch of people with beliefs

This is your project team. Everyone has beliefs about the product; as testers, our role is to provide information about what the product really is.

Good testing touches upon deep elements of philosophy and humanity

This is why some testers do not like Rapid Software Testing: to them, it looks like some kind of voodoo or philosophy magic. “You do not teach how to test, you are just a bunch of philosophers!”

Testing turns philosophy into working tool

This is an explanation of how this “voodoo” or philosophy actually works in Rapid Software Testing.

We do not avoid doing testing.

When you get a list of test cases and finish your batch, testing stops. Rapid Software Testing teaches us that testing never really stops — there is always something new to test.

“Release and users will report the issues” will work in some contexts.

There are schools of thought that support the idea of “let’s release and users will report the issues.” This approach can work in some contexts, but in others it clearly will not.

For example, it may work when you have friendly or forgiving users. It does not work when you deal with critical data or sensitive domains, or when you operate in a highly competitive product market.

We can prevent all bugs, but to know that all of them are prevented, you need testing.

I couldn’t agree more. So the next time you’re asked why we need testing, consider using this philosophical statement — similar to the classic chicken-and-egg problem.

Testing begins with faith in product trouble.

This is the root of the tester mindset. Some teammates argue that there is no such thing as a tester mindset. Yet, in practice, they often approach the product in good faith: it must work, so I won’t even bother to test it.

As testers, we take a different stance. We do not assume the product is trouble-free. We actively try to discover whether there are problems, so that the team can address them before users are affected.

Testing is an open-ended process.

This does not mean that testing never stops. Testing stops when there is no more time available. The time you are given should be used to find the most important product problems — the bugs that matter most.

We are done when we have enough evidence about the truth of the product.

This is another way we claim that testing is done: “Here is my test report. It contains the truth, the whole truth, and nothing but the truth about our product. So help me God!”

Something very similar to the oath a witness takes in a U.S. courtroom.

Fake testing is testing where testers are discouraged from finding and reporting more bugs by the additional paperwork of test cases. There are ceremonies instead of testing.

To be honest, I have done fake testing. Once, I created a test case document that was over 1,000 pages long. It was required by the contract. I was effectively forced by the client to produce fake testing.

If you ever find yourself in a similar situation, be honest with yourself and say it out loud: I am a fake tester.

Everybody should test, but they have their main jobs, like programming, so testing is not done well.

Yes, everyone can test, but that testing will not be as effective as testing done by a dedicated tester. Other team members already have their primary responsibilities — programming, leading the team, managing delivery. Testing is not their main job.

As testers, it is also very tempting to drift into other activities: DevOps work, spinning up servers, writing build scripts. These activities can support testing, but they are not testing themselves. There are other roles that specialize in that work.

This brings us to the five vital qualities of a tester:

Empirical

We check actual behavior through clever experiments. We are in the business of creating a marketplace of experiments.

Skilled

We apply what we have learned about testing — from experience, from books like this one, and from courses such as BBST.

Different

We actively seek trouble instead of relying on positive thinking. This does not mean breaking the product like children breaking toys. It means searching for the most important product problems related to customer needs — problems that may not technically break the system. For example: is double spending possible with a user account?

Motivated

We are motivated to find bugs. When faced with a choice between having a beer with friends or spending one more hour trying to uncover important problems, the choice is clear.

Available

We are available to do testing — and nothing more. This is our core purpose on any project: to test the product.

Follow any rumor of product risk.

When you hear about a potential product risk during a meeting — or even during a coffee or smoke break — note it down. Come up with heuristics and run experiments.

This is how we hunt for the most important bugs with the time we have.

Prepare deep testing.

After you scratch the surface of the product and get a hunch that something might be related to a product risk, it’s time to dig deeper and do deep testing.

The first chapter concludes with the one and only Gerald M. Weinberg — one of Michael’s and James’s key inspirations.

Each test should do some work that was not done by previous tests.

When you feel the need to “count” your tests, this is an excellent heuristic for identifying and removing duplicate tests.
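
As a minimal sketch of this heuristic (an illustration only, not something taken from the book), the Python snippet below flags tests that add no new work compared to the tests before them. The function name `find_redundant_tests`, the test names, and the labels describing what each test exercises are all hypothetical.

```python
def find_redundant_tests(tests):
    """Return names of tests that do no work beyond the tests before them.

    `tests` is an ordered list of (name, covered_items) pairs, where
    covered_items is a set of hypothetical labels for what the test exercises.
    """
    seen = set()        # everything exercised by earlier tests
    redundant = []
    for name, covered in tests:
        new_work = set(covered) - seen
        if not new_work:
            # This test repeats work already done by previous tests.
            redundant.append(name)
        seen |= set(covered)
    return redundant


# Hypothetical example data: the third test repeats the first one.
tests = [
    ("login_valid_password", {"login", "session_created"}),
    ("login_wrong_password", {"login", "error_message"}),
    ("login_valid_password_again", {"login", "session_created"}),
]
print(find_redundant_tests(tests))
```

Labels like these are only a crude proxy for "work done". Two tests that touch the same labels can still differ in data, timing, or state, so a flagged test is a candidate for review, not an automatic deletion.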

And that concludes Chapter One.

I took 33 notes as the basis for this post. Thirty-three pieces of wisdom from just thirteen pages — not bad at all.